It is widely accepted that Artificial Intelligence (“AI”) is going to play a defining role in the future of the financial services industry. Recognizing this, over the past year the Financial Industry Regulatory Authority (“FINRA”) has engaged with market participants to better understand the use of AI in the securities industry and its implications for FINRA regulation, with the resulting whitepaper published in June 2020.

Lab49 has responded to the whitepaper, arguing that regulators and capital market participants need to take a holistic view of AI capabilities to maintain confidence in the decisions made or assisted by AI. The response outlines a capability model for AI and identifies five capabilities where regulatory guidance is most needed: risk management, model explainability, data provenance, data quality, and semantic models.

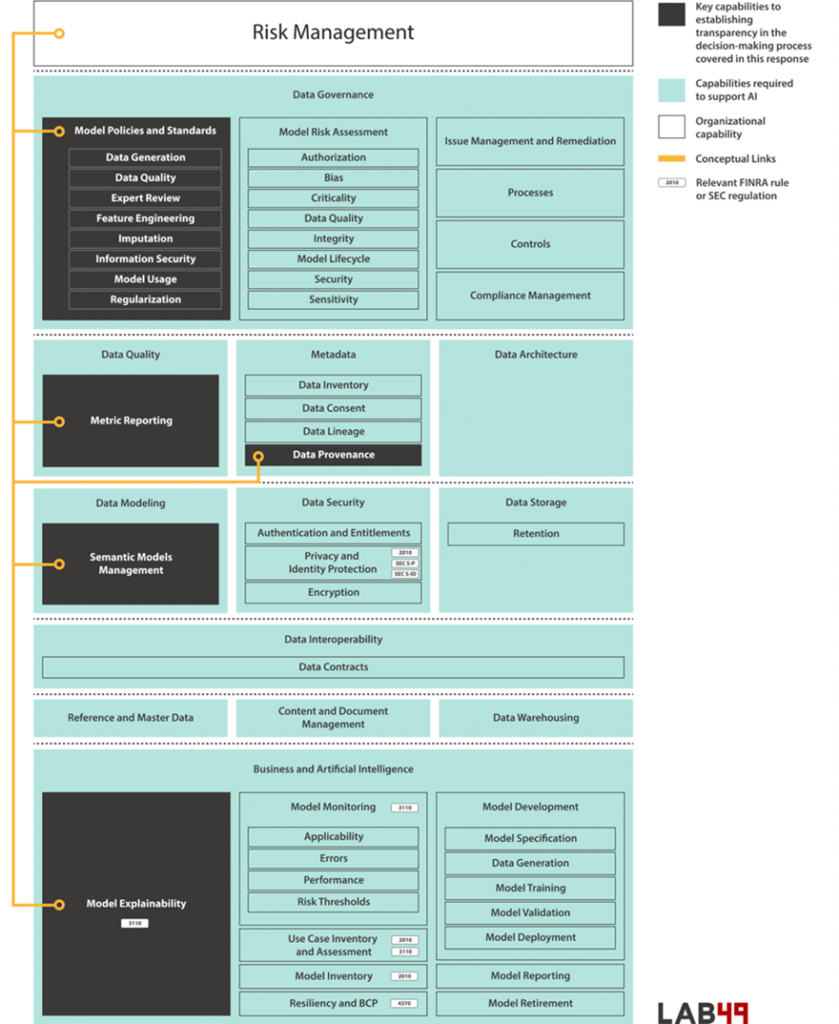

Risk management

Adopting AI has implications for the risk management function of capital market participants and requires careful consideration and management.

There is a range of risk areas relevant to the use of AI, including bias, data quality and security, to name a few. Failure in any of these areas may present legal, financial, operational or reputational risk to firms. It is vital that these firms implement a comprehensive policy framework that addresses all areas of risk. Policy is not the only lever to manage risk as firms should complement policies with standards, processes, and controls.

Two critical processes for consideration are Model Risk Assessment and Use Case Risk Assessment.

Model Risk Assessment identifies the risks relevant to use of AI models to help establish appropriate controls. Understanding how a model will behave in all situations will mitigate model risk. Similar to requirements for financial risk modelling, outcomes from AI models should be validated against a range of real and generated data sets.

Use Case Risk Assessment links use cases to their associated models and risks, which allows firms to identify issues early in the development lifecycle, reducing cost and impact. By maintaining a use case inventory, firms and regulators can also benefit from having an auditable data set of historical decisions that can be reviewed and revisited as policy and regulatory changes occur.

Model explainability

FINRA member firms are obligated to provide fair and ethical decisions to their customers, along with investment recommendations that are suitable and free of conflicting interests. Whether these decisions are manual or automated, customers must be able to trust that the financial advice they receive suits their objectives and risk profile.

Compared to other decision models – for example, those based on linear or logistic regression, or rules engines – AI-driven modelling techniques can be challenging to understand. And the relationship between model performance and complexity means that to improve accuracy AI models necessarily become more complex and therefore even harder to understand. But it is not just the case that firms are unable to clearly explain their AI models: sometimes firms are simply unwilling to provide detailed model explanations, as this risks disclosing proprietary intellectual property or opening the models up to adversarial attacks designed to trick AI models.

Nonetheless, firms that want to mitigate financial, operational and reputational risk should be prepared to demonstrate that investment advice augmented by AI is both suitable and free from conflicts of interest. From individuals clients requiring explanations for specific recommendations through to compliance teams that require audits of AI models to ensure that obligations are met, there are numerous examples where it is vital that FINRA member firms explain their AI models clearly.

We suggest that FINRA guidance cover both global and local explanation patterns. In terms of global explanations, FINRA should require transparency in model development, which ensures that stakeholders have visibility into the methods and techniques utilized to manage data, train models, and deploy them to production. A test-driven approach that not only assesses model performance at a global level, but also employs targeted tests for bias and conflict of interest, will give stakeholders confidence that models meet the necessary standard. Local explanation patterns should have the capability to provide explanations for discrete model output, which means individual clients can be provided with the rationale for decisions and recommendations. Accuracy in local explanation is paramount as discrepancies may lead to reputational risk with financial consequences.

To ensure these obligations are met, model governance should be embedded throughout the software development life cycle. Both in the specification and development of models, and the ongoing testing, deployment and operation, member firms should be guided by FINRA on how to establish governance practises that meet regulatory obligations while allowing the pursuit of the strategic benefits of AI.

Regulators and capital market participants need to take a holistic view of AI capabilities to maintain confidence in the decisions made or assisted by AI.

Data Provenance, Data Quality and Semantic Models

The use of standards that address data provenance, data quality and semantic models are important when providing rationale for decisions or recommendations that leverage AI.

Provenance describes the origins and history of a specific piece of data. Who owns provenance information, when in the lifecycle it is captured, what granularity of provenance information is needed, where it is stored, and how it is integrated are all essential considerations. Capital market participants should consider the use of open standards such as PROV to allow integration of provenance information in complex technology landscapes.

Confidence in AI decision-making is also influenced by awareness of the quality of data. Data quality is measured, monitored and reported across many dimensions including accuracy, completeness, consistency, timeliness availability and fitness for use. Open data quality vocabularies, such as DQV , should be considered to report data quality metrics in a machine-readable way that can be linked to provenance information and definitions of data.

Finally, the evaluation of provenance and quality of data depends on shared meaning. Concepts should be defined in an interoperable and reusable way using agreed glossaries, taxonomies and thesauri, and ontologies. Lab49 suggests that firms consider the use of Semantic Web and Linked Data standards, including Reference Data Framework, Reference Data Framework Schema, Web Ontology Language and Simple Knowledge Organization Systems.

Establishing and maintaining trust

Regulators can play a leading role in the adoption of AI by capital market participants by following a capability-based approach to supervisory guidance. Focusing on capabilities outlined above will help establish and maintain trust in the decisions made or assisted by AI.

Reach out to the team at [email protected] to find out how Lab49 can help your business establish and optimize AI capabilities to increase the value of data.